What is Who Codes Best?

Who Codes Best is a platform for analyzing, comparing, and benchmarking AI coding assistants. It tests how well major AI models generate and review code and scores them on performance. Its CodeComparison function provides a systematic comparison and returns the best-performing model, so users can choose an AI based on speed, quality, and cost. The platform also offers an overview of popular AI models and AI coding agents, ranked samples of the code they generate, and a news and updates section covering the latest AI coding model releases and benchmarks.

What are some core features of Who Codes Best?

Core features of Who Codes Best include: comparing, analyzing, and scoring major AI models; AI benchmarking; analysis of code quality, speed, and cost-effectiveness; ranked code samples generated by AI models and agents; collected user comparison data; a section dedicated to AI news and updates; coding task testing to evaluate model performance; and support for informed user choice and AI-based decision making.

How can I test coding tasks across different AI models on Who Codes Best?

On Who Codes Best, users submit a specific coding task, and the platform runs it through the different AI models it covers. The platform then assesses the generated code for speed, quality, and cost-effectiveness, so the user can compare the results and make an informed choice.

Can I compare speed, quality, and cost of various AI models on Who Codes Best?

Yes. Who Codes Best lets you compare the speed, quality, and cost of various AI models. These comparison metrics help you choose the model best suited to your requirements.

Which AI models does Who Codes Best evaluate?

Who Codes Best evaluates AI models such as 'Claude 3.5' from Anthropic, 'GPT-4o' from OpenAI, and 'Gemini Pro' from Google, among others. It tests how well these models generate and review code. The models are benchmarked and scored using the platform's proprietary methodology to establish their performance levels.

What does AI Benchmarking do on Who Codes Best?

AI Benchmarking on Who Codes Best evaluates the performance of various AI models. It assigns the models coding tasks and gauges their performance in terms of speed, quality, and cost-effectiveness. The benchmark results are then used to rank and compare the models, helping users make informed decisions.

How are AI models ranked on Who Codes Best?

AI models are ranked on Who Codes Best based on their performance in generating and reviewing code. The platform benchmarks the models' capabilities and scores them accordingly. These scores form the basis for the ranking. The process compares speed, quality, and cost, among other factors.

How can I select the best performing model with Who Codes Best?

Who Codes Best enables users to select the best performing model using its CodeComparison function. Once the models are evaluated based on their ability to generate and review code, the comparison function ranks them according to their score. The model with the highest score, indicating superior performance, is then identified as the best performing model.
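The selection step described above can be sketched as follows. This is only an illustration, not the platform's actual CodeComparison implementation: the `ModelResult` fields, the 0 to 1 score scale, the weights, and the model names are all assumptions, since the scoring methodology is proprietary.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelResult:
    """Per-model scores on an assumed 0..1 scale, higher is better."""
    name: str
    quality: float
    speed: float
    cost: float  # higher means cheaper to run


# Hypothetical weights; the platform does not publish its own.
WEIGHTS = {"quality": 0.5, "speed": 0.25, "cost": 0.25}


def overall_score(result: ModelResult) -> float:
    """Combine the three criteria into a single weighted score."""
    return (WEIGHTS["quality"] * result.quality
            + WEIGHTS["speed"] * result.speed
            + WEIGHTS["cost"] * result.cost)


def rank_models(results: List[ModelResult]) -> List[ModelResult]:
    """Return the models sorted from highest to lowest overall score."""
    return sorted(results, key=overall_score, reverse=True)


def best_model(results: List[ModelResult]) -> ModelResult:
    """Return the top-ranked model."""
    if not results:
        raise ValueError("results must not be empty")
    return rank_models(results)[0]


if __name__ == "__main__":
    sample = [
        ModelResult("model-a", quality=1.0, speed=0.5, cost=0.5),
        ModelResult("model-b", quality=0.5, speed=0.75, cost=0.75),
    ]
    print(best_model(sample).name)
```

Changing the weights changes the winner, which is why a user who cares mostly about cost may reasonably pick a different model than one who cares mostly about quality.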

Does Who Codes Best provide code samples generated by AI models?

Yes, Who Codes Best provides code samples generated by AI models. These are ranked code samples representing the capabilities of popular AI models and AI coding agents, allowing users to compare their effectiveness and quality.

Where can I find news and updates about AI coding on Who Codes Best?

News and updates about AI coding can be found within a dedicated section on Who Codes Best. This section provides users with the most up-to-date information regarding AI coding model releases, benchmarks, and other relevant news.

What makes Who Codes Best a reliable platform for AI comparison?

Who Codes Best is a reliable platform for AI comparison because its methodology is built on real-world testing data. It provides a transparent evaluation of major AI models, testing their ability to generate and review code. The platform also offers ranked code samples and supports informed user choice by allowing comparison of speed, quality, and cost.

How does speed analysis work on Who Codes Best?

Speed analysis on Who Codes Best measures how quickly each AI model generates code. The models are given the same coding task, and the time each one takes to produce code is measured and compared. The result contributes to each model's overall performance score.
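A minimal sketch of this kind of timing comparison is shown below. It assumes each model is wrapped in a plain Python callable that takes a task description and returns code; the function names, the `repeats` parameter, and the use of a median are illustrative choices, not details taken from the platform.

```python
import statistics
import time
from typing import Callable, Dict


def time_generation(generate: Callable[[str], str], task: str,
                    repeats: int = 3) -> float:
    """Return the median wall-clock time in seconds over several runs."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        generate(task)  # the generated code itself is ignored here
        durations.append(time.perf_counter() - start)
    return statistics.median(durations)


def compare_speed(models: Dict[str, Callable[[str], str]],
                  task: str) -> Dict[str, float]:
    """Time every model on the same task and return seconds per model."""
    return {name: time_generation(fn, task) for name, fn in models.items()}
```

Taking the median of a few runs reduces the effect of a single slow or fast outlier, which matters when response times vary from call to call.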

Does Who Codes Best offer a section for AI news and updates?

Yes, Who Codes Best offers a dedicated section for AI news and updates. The purpose of this section is to keep users informed about the latest releases and benchmarks in the AI coding model landscape.

Is there any cost effectiveness analysis provided by Who Codes Best?

Yes, Who Codes Best provides a cost-effectiveness analysis of AI models. This involves comparing the cost of using different AI models and relating that to the quality and speed of their performance, enabling users to choose the most economically viable option without compromising on the quality and speed of generated code.
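One simple way to relate cost to quality is a quality-per-dollar ratio with a minimum quality threshold. The sketch below is an assumption for illustration only: the `most_economical` function, the ratio, the threshold, and the sample figures are hypothetical and do not describe the platform's actual analysis.

```python
from typing import Dict, Optional, Tuple


def most_economical(results: Dict[str, Tuple[float, float]],
                    min_quality: float) -> Optional[str]:
    """Pick the model with the best quality per dollar.

    `results` maps a model name to (quality, cost_usd). Models below
    `min_quality` are excluded so that a low price cannot outweigh
    unacceptable output. Returns None if no model qualifies.
    """
    ratios = {
        name: quality / cost_usd
        for name, (quality, cost_usd) in results.items()
        if quality >= min_quality and cost_usd > 0
    }
    if not ratios:
        return None
    return max(ratios, key=ratios.get)


if __name__ == "__main__":
    # Made-up numbers: (quality on a 0..1 scale, cost in USD per task).
    sample = {
        "model-a": (0.9, 0.30),
        "model-b": (0.8, 0.10),
        "model-c": (0.4, 0.01),
    }
    print(most_economical(sample, min_quality=0.7))
```

Without the threshold, the cheapest model would win here despite its low quality score, which is the trade-off the analysis is meant to expose.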

Are there any functionalities related to AI-based decision making in Who Codes Best?

Yes, Who Codes Best incorporates functionalities related to AI-based decision making, notably through its CodeComparison function. This performance assessment and comparison tool helps users to choose the most suitable AI model for their coding purposes.

Where can I find user-generated reviews for AI models on Who Codes Best?

Reviews for AI models on Who Codes Best can be found through the ranked code samples provided by the platform. These ranks, influenced by users' assessments as well as the platform's analysis results, serve as an effective review system for the different AI models.

Does Who Codes Best provide an overview of the AI coding market?

Yes, Who Codes Best provides an overview of the AI coding market. By benchmarking, comparing, and ranking a wide range of AI models and agents, the platform gives users a broad view of the available options, their capabilities, performance scores, and user reviews.

What is the purpose of the Coding Task Testing feature on Who Codes Best?

The purpose of the Coding Task Testing feature on Who Codes Best is to evaluate the performance of different AI models. It involves processing specific coding tasks through different AI models and assessing the resulting code. This feature allows users to test the capabilities of AI models and compare their speed, quality and cost-effectiveness.

Base44 is an AI-powered platform for building fully-functional apps with no code and minimal setup hassle. The platform leverages advanced AI technology to translate simple, natural language descriptions into working apps. Let’s make your dream a reality. Right now.

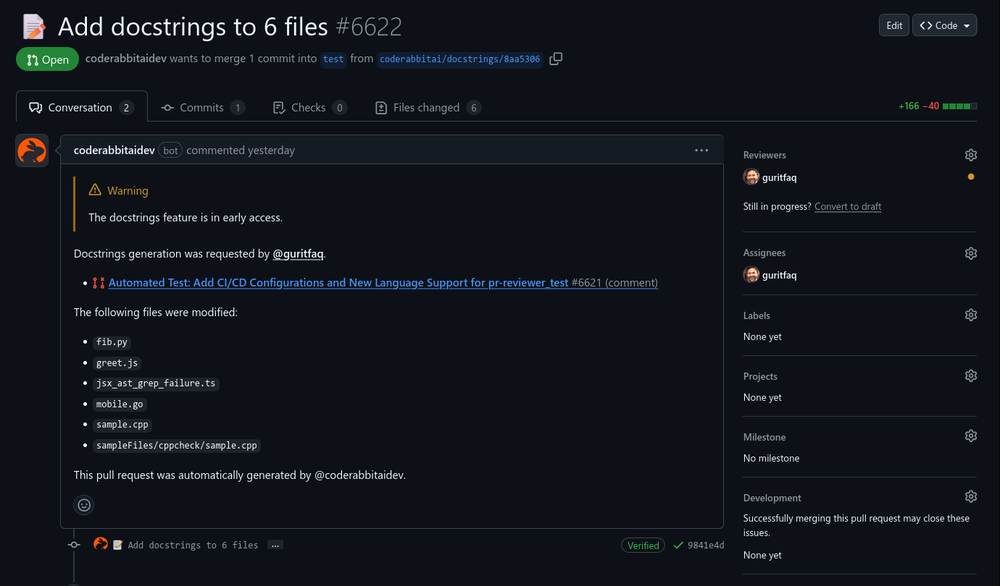

Base44 is an AI-powered platform for building fully-functional apps with no code and minimal setup hassle. The platform leverages advanced AI technology to translate simple, natural language descriptions into working apps. Let’s make your dream a reality. Right now. Reducing manual efforts in first-pass during code-review process helps speed up the "final check" before merging PRs
