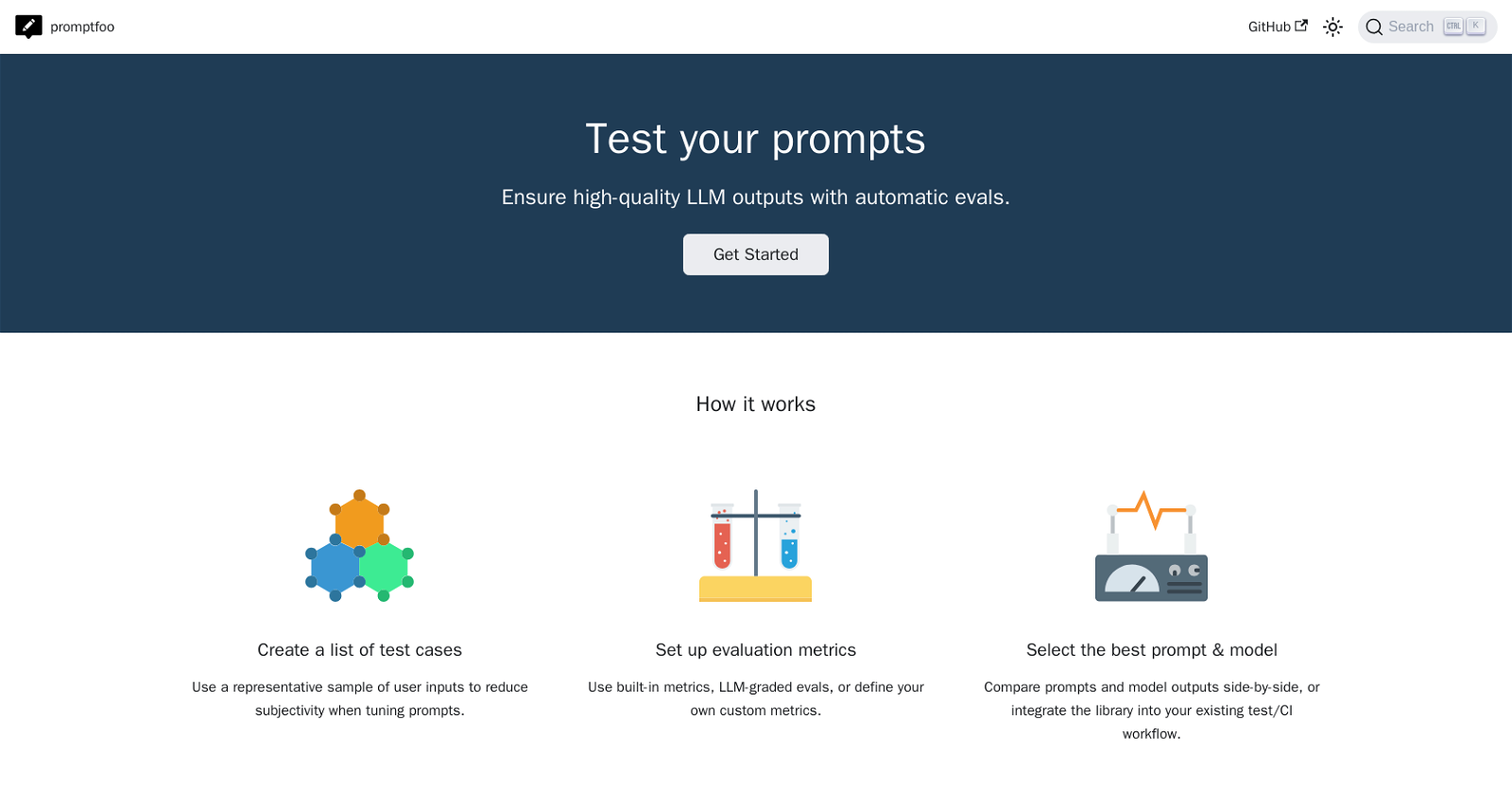

What is the purpose of Promptfoo?

Promptfoo's primary purpose is to evaluate and test the quality of large language model (LLM) prompts. It runs automatic evaluations to help ensure that LLMs produce high-quality outputs, helps users improve their prompts, supports decisions based on objective evaluation metrics, and makes testing more efficient.

How does Promptfoo test LLM prompts?

Promptfoo tests LLM prompts by letting users build a list of test cases from a representative sample of user inputs, which reduces subjectivity when fine-tuning prompts. Users then attach evaluation metrics to those test cases, using either the tool's built-in metrics or custom metrics they define themselves.
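
To make the idea concrete, here is a minimal Python sketch of a test-case list and a runner, assuming a hypothetical `fake_model` function in place of a real LLM call. The names `PromptCase` and `run_suite` are made up for illustration and are not Promptfoo's API; in Promptfoo itself, test cases are usually declared in a configuration file with per-case variables and assertions rather than written in Python.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PromptCase:
    """One test case: template variables plus checks the output must satisfy."""
    vars: dict[str, str]
    checks: list[Callable[[str], bool]] = field(default_factory=list)


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call: just upper-cases the prompt."""
    return prompt.upper()


def run_suite(template: str, cases: list[PromptCase],
              model: Callable[[str], str] = fake_model) -> float:
    """Render the template for each case, call the model, and return the pass rate."""
    if not cases:
        return 0.0
    passed = 0
    for case in cases:
        output = model(template.format(**case.vars))
        # A case passes only if every one of its checks passes.
        if all(check(output) for check in case.checks):
            passed += 1
    return passed / len(cases)


# A tiny, hypothetical "representative sample" of user inputs.
cases = [
    PromptCase({"question": "reset my password"}, [lambda out: "PASSWORD" in out]),
    PromptCase({"question": "cancel my order"}, [lambda out: "ORDER" in out]),
    PromptCase({"question": "talk to a human"}, [lambda out: "REFUND" in out]),
]

rate = run_suite("Answer the customer: {question}", cases)
print(f"pass rate: {rate:.2f}")  # prints "pass rate: 0.67"
```

The third case fails on purpose, showing how a single pass rate summarizes results across the whole sample instead of relying on one hand-picked example.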

Can I define custom metrics in Promptfoo?

Yes, Promptfoo allows users to define their own custom metrics. This feature adds flexibility by accommodating unique evaluation standards.
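
As an illustration, Promptfoo's documentation describes Python-based custom assertions: a file defines a `get_assert(output, context)` function that returns a pass/fail boolean, a numeric score, or a result dictionary with `pass`, `score`, and `reason` keys. The sketch below assumes that interface (check the current docs before relying on it); the word-budget metric and the `max_words` variable are hypothetical examples, not part of Promptfoo.

```python
from typing import Any


def get_assert(output: str, context: dict[str, Any]) -> dict[str, Any]:
    """Hypothetical custom metric: reward answers that stay within a word budget.

    The function name and the pass/score/reason keys follow the shape of
    Promptfoo's Python assertions as we understand them; the metric itself
    and the `max_words` test variable are made up for illustration.
    """
    max_words = int(context.get("vars", {}).get("max_words", 50))
    word_count = len(output.split())
    if word_count <= max_words:
        score = 1.0
    else:
        # Lose credit in proportion to the overshoot; never go below zero.
        overshoot = (word_count - max_words) / max(max_words, 1)
        score = max(0.0, 1.0 - overshoot)
    return {
        "pass": word_count <= max_words,
        "score": score,
        "reason": f"{word_count} words (budget {max_words})",
    }


if __name__ == "__main__":
    ctx = {"vars": {"max_words": "5"}}
    print(get_assert("Use the reset link.", ctx))
    print(get_assert("one two three four five six", ctx))
```

Because the metric is plain Python, it can be unit-tested on its own before being wired into an evaluation.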

How does Promptfoo reduce subjectivity in fine-tuning prompts?

Promptfoo reduces subjectivity in fine-tuning prompts by allowing users to create a list of test cases using a representative sample of user inputs. This ensures that a wide variety of scenarios are considered during the evaluation process, resulting in a more objective evaluation.

Can I view the comparisons between prompts and model outputs in Promptfoo?

Yes, Promptfoo allows users to view comparisons between prompts and model outputs side by side. This feature aids users in choosing the best prompt and model for their specific needs.
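
A side-by-side comparison is essentially a grid with one row per test input and one column per prompt. A minimal sketch of building such a grid, assuming a hypothetical `fake_model` in place of a real provider, might look like this; Promptfoo produces its own comparison view, so this only illustrates the shape of the data.

```python
from typing import Callable


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call: reports the prompt length."""
    return f"<{len(prompt)}-char reply>"


def comparison_table(prompts: dict[str, str], inputs: list[str],
                     model: Callable[[str], str] = fake_model) -> list[list[str]]:
    """Return a header row plus one row per input: [input, output for each prompt]."""
    rows = [["input"] + list(prompts)]
    for text in inputs:
        rows.append([text] + [model(template.format(text=text)) for template in prompts.values()])
    return rows


# Two hypothetical prompt variants evaluated on the same inputs.
prompts = {
    "terse": "Summarize: {text}",
    "friendly": "Please write a short, friendly summary of: {text}",
}
inputs = ["The order shipped late.", "Refund issued."]

for row in comparison_table(prompts, inputs):
    print(" | ".join(cell.ljust(26) for cell in row))
```

Keeping the inputs fixed across columns is what makes the comparison fair: each prompt is judged on exactly the same cases.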

How can I incorporate Promptfoo into my existing test or CI workflow?

Promptfoo can be added to an existing test or continuous integration (CI) workflow by running its evaluations as a pipeline step, for example with the `promptfoo eval` command. This helps keep the quality of LLM prompts consistent as they change.
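
A common CI pattern is a gate step that fails the build when too many test cases fail. The sketch below assumes the evaluation results have already been reduced to a hypothetical list of dictionaries with `name` and `pass` keys; that format is an assumption for illustration, not Promptfoo's output schema, so the parsing would need to be adapted to whatever your evaluation step actually writes.

```python
import json


def gate(results: list[dict], min_pass_rate: float = 0.9) -> int:
    """Return a process exit code: 0 if enough test cases passed, 1 otherwise.

    `results` is a hypothetical list of {"name": str, "pass": bool} records.
    """
    if not results:
        print("no results found; failing the build")
        return 1
    passed = sum(1 for r in results if r.get("pass"))
    rate = passed / len(results)
    print(f"{passed}/{len(results)} passed ({rate:.0%}), required {min_pass_rate:.0%}")
    for r in results:
        if not r.get("pass"):
            print(f"  FAILED: {r.get('name', '<unnamed>')}")
    return 0 if rate >= min_pass_rate else 1


# Hypothetical results, as they might be loaded from a JSON file written earlier in the pipeline.
sample = json.loads('[{"name": "password reset", "pass": true}, {"name": "refund policy", "pass": false}]')
code = gate(sample, min_pass_rate=0.9)
print("exit code:", code)
```

In a real pipeline the script would end with `sys.exit(gate(results))`, so a non-zero return value fails the CI job.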

Is there a web viewer available in Promptfoo?

Yes, Promptfoo offers a web viewer (launched with `promptfoo view`) for browsing evaluation results. This makes the tool accessible to users who prefer a graphical interface over the command line.

Does Promptfoo provide a command line interface?

Yes, Promptfoo provides a command-line interface in addition to the web viewer. This suits users who prefer a terminal- or script-based way of working with the tool.

How many users are served by LLM apps using Promptfoo?

According to the project, LLM applications using Promptfoo serve over 10 million users, which suggests the tool is widely used in the LLM community.

Can Promptfoo be used to evaluate the quality of AI language model prompts?

Yes, Promptfoo can be used to evaluate the quality of AI language model prompts, ensuring that the prompts yield high-quality outputs.

Is there a representative sample feature in Promptfoo?

Yes, in the sense that Promptfoo is built around representative samples: users create their list of test cases from a representative sample of user inputs, which makes the evaluation more comprehensive and objective.
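
One common way to build such a sample (a general technique, not something Promptfoo prescribes) is stratified sampling: group logged user inputs by category and take a few from each, so rare but important request types are not crowded out by common ones. A minimal sketch, assuming hypothetical `(category, input)` pairs from tagged logs:

```python
import random
from collections import defaultdict


def stratified_sample(logs: list[tuple[str, str]], per_category: int, seed: int = 0) -> list[str]:
    """Pick up to `per_category` inputs from each category.

    `logs` is a hypothetical list of (category, user_input) pairs,
    for example from tagged support tickets.
    """
    by_category: dict[str, list[str]] = defaultdict(list)
    for category, text in logs:
        by_category[category].append(text)
    rng = random.Random(seed)  # fixed seed so the test set is reproducible
    sample: list[str] = []
    for category in sorted(by_category):
        items = by_category[category]
        sample.extend(rng.sample(items, min(per_category, len(items))))
    return sample


logs = [
    ("billing", "Why was I charged twice?"),
    ("billing", "Update my card"),
    ("billing", "Refund please"),
    ("shipping", "Where is my parcel?"),
    ("account", "Reset my password"),
]
print(stratified_sample(logs, per_category=2))
```

The fixed seed keeps the sample stable between runs, so score changes reflect prompt changes rather than a reshuffled test set.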

How can I use Promptfoo to select the best model and prompt for my needs?

With Promptfoo, you can easily select the best model and prompt for your needs by comparing prompts and model outputs side by side. You also have the option to define your own custom metrics or use built-in metrics for evaluation.
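
Once every prompt and model combination has been scored on the same test cases, choosing the best one can be as simple as comparing average scores. A minimal sketch, assuming hypothetical prompt names, model names, and per-test scores between 0 and 1:

```python
from statistics import mean


def pick_best(scores: dict[tuple[str, str], list[float]]) -> tuple[tuple[str, str], float]:
    """Return the (prompt, model) pair with the highest mean score, plus that mean.

    `scores` maps each hypothetical (prompt_name, model_name) combination to the
    per-test-case scores it received from built-in or custom metrics.
    """
    averages = {combo: mean(vals) for combo, vals in scores.items() if vals}
    if not averages:
        raise ValueError("no scored combinations")
    best = max(averages, key=averages.get)
    return best, averages[best]


scores = {
    ("terse", "model-a"): [1.0, 0.5, 1.0],
    ("terse", "model-b"): [0.5, 0.5, 1.0],
    ("friendly", "model-a"): [1.0, 1.0, 0.5],
    ("friendly", "model-b"): [1.0, 1.0, 1.0],
}
best, avg = pick_best(scores)
print(best, round(avg, 2))  # prints "('friendly', 'model-b') 1.0"
```

A plain mean is the simplest aggregate; if two combinations tie, `max` returns the first one it encounters.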

How can Promptfoo improve my LLM model outputs?

Promptfoo helps improve LLM outputs by making prompt quality measurable through systematic testing and evaluation. Testing against representative user inputs with customizable metrics shows which prompt and model changes actually improve results.

Can I create a list of test cases with Promptfoo?

Yes, with Promptfoo, users can create a list of test cases from a representative sample of user inputs. This lets them test their prompts thoroughly under a wide variety of conditions.

What built-in metrics does Promptfoo offer?

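Promptfoo offers built-in evaluation metrics (called assertions) that users can apply without writing code, which makes them a convenient starting point for evaluation. They include deterministic checks such as `equals`, `contains`, `regex`, and `is-json`, as well as model-assisted checks such as `similar` (semantic similarity) and `llm-rubric` (grading by another LLM).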
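
To show what the simpler deterministic checks evaluate, here are our own simplified re-implementations of a few of them in Python. These are illustrative stand-ins, not Promptfoo's code; the real assertions are configured declaratively and support more options. The `run_assertions` helper and its `(type, value)` input format are hypothetical.

```python
import json
import re
from typing import Callable


def equals(output: str, expected: str) -> bool:
    # Exact string match.
    return output == expected


def contains(output: str, expected: str) -> bool:
    # Case-sensitive substring check.
    return expected in output


def icontains(output: str, expected: str) -> bool:
    # Case-insensitive substring check.
    return expected.lower() in output.lower()


def regex(output: str, pattern: str) -> bool:
    # True if the pattern matches anywhere in the output.
    return re.search(pattern, output) is not None


def is_json(output: str, _unused: str = "") -> bool:
    # True if the whole output parses as JSON.
    try:
        json.loads(output)
    except json.JSONDecodeError:
        return False
    return True


CHECKS: dict[str, Callable[[str, str], bool]] = {
    "equals": equals, "contains": contains, "icontains": icontains,
    "regex": regex, "is-json": is_json,
}


def run_assertions(output: str, assertions: list[tuple[str, str]]) -> dict[str, bool]:
    """Apply hypothetical (type, value) assertions to one model output and report each result."""
    return {f"{kind}:{value}": CHECKS[kind](output, value) for kind, value in assertions}


print(run_assertions('{"status": "ok"}', [
    ("contains", "ok"),
    ("icontains", "STATUS"),
    ("regex", r'"status":\s*"\w+"'),
    ("is-json", ""),
]))
```

Deterministic checks like these are cheap and repeatable, which is why they make a good first layer before model-graded metrics.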

Is Promptfoo a library?

Yes, Promptfoo is a library designed for evaluating and testing LLM prompt quality.

Can I seamlessly integrate Promptfoo into my workflow?

Yes. Promptfoo can be incorporated into your existing test or continuous integration (CI) workflow, which makes it a flexible tool for a variety of scenarios.

How popular and reliable is Promptfoo within the LLM community?

Promptfoo's adoption suggests it is both popular and trusted within the LLM community: according to the project, it is used by LLM applications serving over 10 million users.

Is Promptfoo a trusted tool for testing LLM prompts?

Yes. Its large user base and its use in LLM applications serving millions of users indicate that Promptfoo is widely trusted for testing LLM prompts.

Where can I get started with using Promptfoo?

You can get started by reading the documentation on the Promptfoo website, which includes an introduction as well as guides to command-line usage and Node.js package usage.