What is BenchLLM?

BenchLLM is an evaluation tool designed for AI engineers. It lets users evaluate their large language models (LLMs) in real time.

What functionalities does BenchLLM provide?

BenchLLM lets AI engineers evaluate their LLMs on the fly, build test suites for their models, and generate quality reports. Engineers can choose between automated, interactive, and custom evaluation strategies, and can define tests in JSON or YAML format.

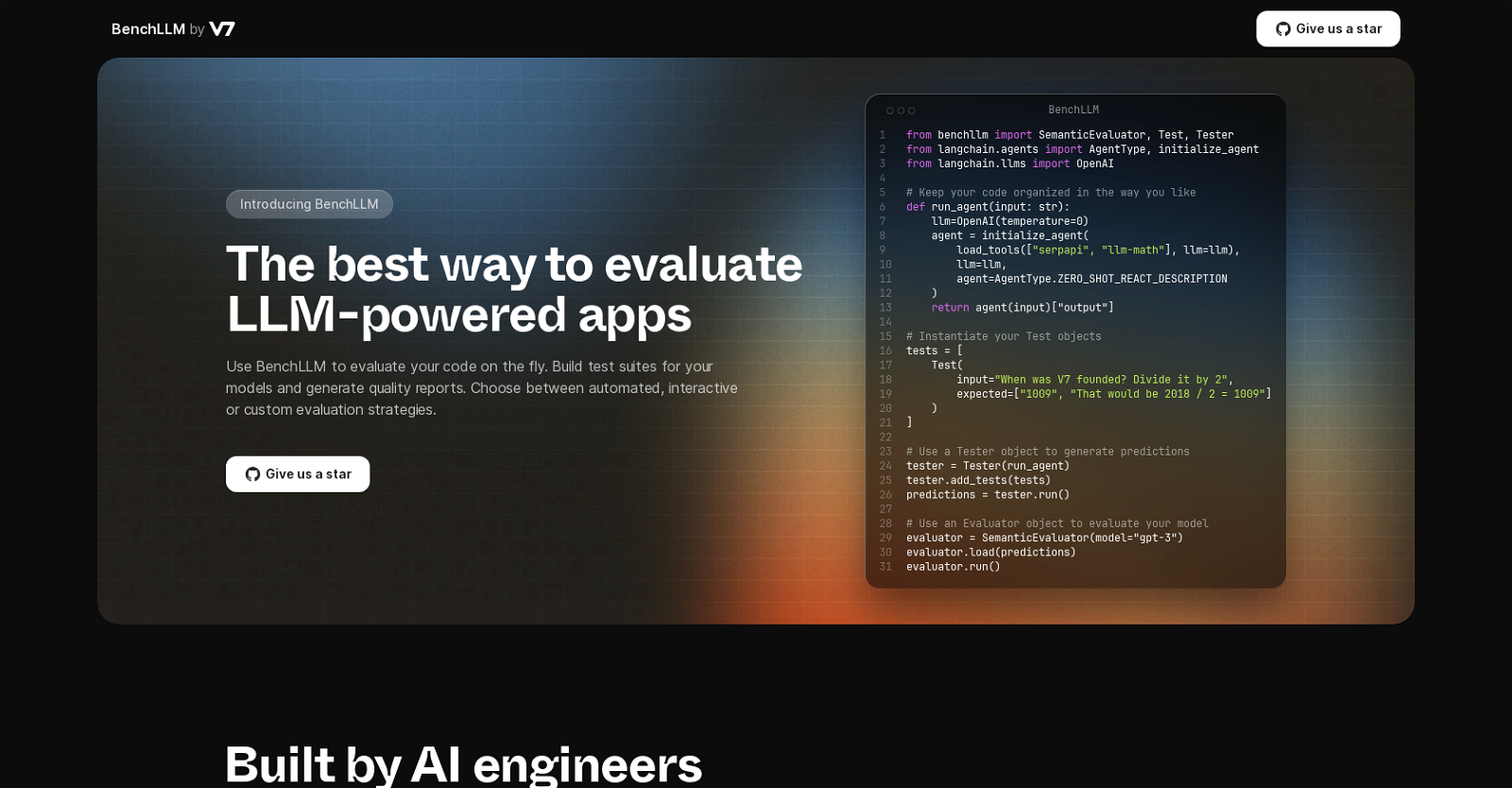

How can I use BenchLLM in my coding process?

To use BenchLLM, you can organize your code in whatever way suits you. You start an evaluation by creating Test objects and adding them to a Tester object. Each Test object defines a specific input and the expected outputs for the LLM. The Tester object generates predictions from those inputs, and the predictions are then loaded into an Evaluator object, such as SemanticEvaluator, which judges them against the expected outputs.
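The flow described above can be sketched with plain Python stand-ins. The classes below (`SketchTest`, `SketchTester`, `ExactMatchEvaluator`) and the `toy_model` function are hypothetical and are not BenchLLM's own classes. They only mirror the three steps (define tests, generate predictions, evaluate), and they use a simple string comparison where BenchLLM would use semantic evaluation.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SketchTest:
    """One test case: an input prompt and the acceptable answers."""
    input: str
    expected: List[str]


@dataclass
class Prediction:
    """The output a model produced for one test."""
    test: SketchTest
    output: str


class SketchTester:
    """Collects tests and runs the model under test on each input."""

    def __init__(self, model: Callable[[str], str]) -> None:
        self.model = model
        self.tests: List[SketchTest] = []

    def add_tests(self, tests: List[SketchTest]) -> None:
        self.tests.extend(tests)

    def run(self) -> List[Prediction]:
        return [Prediction(t, self.model(t.input)) for t in self.tests]


class ExactMatchEvaluator:
    """Stand-in evaluator: passes if the output equals any expected answer."""

    def __init__(self) -> None:
        self.predictions: List[Prediction] = []

    def load(self, predictions: List[Prediction]) -> None:
        self.predictions = list(predictions)

    def run(self) -> List[bool]:
        return [p.output.strip() in p.test.expected for p in self.predictions]


def toy_model(prompt: str) -> str:
    """Placeholder for a real LLM call (assumption for this sketch)."""
    return {"What's 1+1?": "2"}.get(prompt, "I don't know")


tester = SketchTester(toy_model)
tester.add_tests([
    SketchTest(input="What's 1+1?", expected=["2", "It's 2"]),
    SketchTest(input="Capital of France?", expected=["Paris"]),
])
predictions = tester.run()

evaluator = ExactMatchEvaluator()
evaluator.load(predictions)
results = evaluator.run()
print(results)
```

The toy model answers only the first question, so the first test passes and the second fails. Replacing `toy_model` with a function that calls a real LLM keeps the rest of the flow unchanged.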

What AI tools can BenchLLM integrate with?

BenchLLM supports the integration of different AI tools. The examples given are 'serpapi' (a web search tool) and 'llm-math' (a calculator tool), both of which are LangChain tool names.

What does the 'OpenAI' functionality in BenchLLM do?

The 'OpenAI' functionality in BenchLLM is used to initialize an agent, which will be used to generate predictions based on the input given to the Test objects.

Can I adjust temperature parameters in BenchLLM's 'OpenAI' functionality?

Yes, BenchLLM allows adjustment of the temperature parameter in its 'OpenAI' functionality. This lets engineers control how deterministic the tested model's output is: lower temperatures make responses more repeatable, while higher temperatures make them more varied.
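As a general illustration of why temperature affects repeatability (this is not BenchLLM code), the sketch below applies a temperature-scaled softmax to a made-up list of token scores. The function name and the scores are assumptions chosen for the example.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn raw scores into probabilities, dividing by temperature first."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.0]  # made-up scores for three candidate tokens
sharp = softmax_with_temperature(logits, 0.5)  # low temperature
flat = softmax_with_temperature(logits, 2.0)   # high temperature

print([round(p, 3) for p in sharp])
print([round(p, 3) for p in flat])
```

At the lower temperature the top-scoring token receives a larger share of the probability, so sampling picks it more consistently. That consistency is what makes test results easier to reproduce.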

What is the process of evaluating an LLM in BenchLLM?

Evaluating an LLM involves creating Test objects and adding them to a Tester object. The Tester object generates predictions based on the provided input. These predictions are then loaded into an Evaluator object, which uses a model such as 'gpt-3' to evaluate the LLM's performance and accuracy.

What do the Tester and Evaluator objects do in BenchLLM?

The Tester and Evaluator objects are the two core stages of the LLM evaluation process. The Tester object generates predictions based on the provided input, whereas the Evaluator object (for example, SemanticEvaluator) judges those predictions against the expected outputs.

What model does the Evaluator object utilize in BenchLLM?

In the example given, the Evaluator object is a SemanticEvaluator that uses the model 'gpt-3'.

How can BenchLLM help me assess my model's performance and accuracy?

BenchLLM helps assess your model's performance and accuracy by letting you define specific tests with expected outputs for the LLM. It generates predictions based on the input you provide and then uses an evaluator, such as SemanticEvaluator, to compare these predictions against the expected outputs.

Why was BenchLLM created?

BenchLLM was created by a team of AI engineers to address the need for an open and flexible LLM evaluation tool. The creators wanted to balance the power and flexibility of AI with predictable, reliable results.

What are the evaluation strategies offered by BenchLLM?

BenchLLM offers three evaluation strategies: automated, interactive, and custom. You can choose the one that best fits your evaluation needs.

Can BenchLLM be used in a CI/CD pipeline?

Yes, BenchLLM can be used in a CI/CD pipeline. It is driven by simple CLI commands, so you can run the CLI as a testing step in your pipeline.
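CI systems typically decide whether a step passed from the process exit code. The sketch below shows one way a list of pass/fail results could be turned into such a code. The function `ci_exit_code` and its `min_pass_rate` parameter are hypothetical names for illustration, not part of BenchLLM's CLI.

```python
from typing import List


def ci_exit_code(results: List[bool], min_pass_rate: float = 1.0) -> int:
    """Return 0 if enough tests passed, 1 otherwise (the usual CI convention)."""
    if not results:
        # Treat an empty run as a failure so a misconfigured job is noticed.
        return 1
    pass_rate = sum(results) / len(results)
    return 0 if pass_rate >= min_pass_rate else 1


# Example: one of two tests failed, so a strict gate fails the build.
strict = ci_exit_code([True, False])
# The same results pass a gate that only requires half the tests to succeed.
lenient = ci_exit_code([True, False], min_pass_rate=0.5)
print(strict, lenient)
```

A pipeline script would pass the returned value to `sys.exit` so that the CI job is marked as failed when the gate is not met.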

How can BenchLLM help detect regressions in production?

BenchLLM helps detect regressions in production by allowing you to monitor the performance of the models. The monitoring feature makes it possible to spot any performance slippage, providing early warning of any potential regressions.
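One simple way to spot a regression is to compare the results of a current run against a stored baseline. The sketch below assumes results are kept as a mapping from a test id to a pass/fail flag. That storage format and the function `find_regressions` are assumptions for illustration, not BenchLLM's monitoring feature.

```python
from typing import Dict, List


def find_regressions(baseline: Dict[str, bool], current: Dict[str, bool]) -> List[str]:
    """Return the ids of tests that passed in the baseline but do not pass now.

    A test that is missing from the current run is counted as a regression.
    """
    return sorted(
        test_id
        for test_id, passed_before in baseline.items()
        if passed_before and not current.get(test_id, False)
    )


baseline = {"math": True, "capital": True, "joke": False}
current = {"math": True, "capital": False, "joke": False}
regressions = find_regressions(baseline, current)
print(regressions)
```

Here only `capital` is reported: `joke` was already failing in the baseline, so it is not a new regression.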

How can I define my tests intuitively in BenchLLM?

You can define your tests intuitively in BenchLLM by creating test objects that define specific inputs and expected outputs for the LLM.

What formats does BenchLLM support to define tests?

BenchLLM supports test definition in JSON or YAML format. This gives you the flexibility to define tests in a suitable and easy-to-understand format.
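A test definition in JSON might look like the string in the sketch below, which is parsed and checked with Python's standard `json` module. The field names `input` and `expected` are assumptions based on the "inputs and expected outputs" described here, and `load_test` is a hypothetical helper, not a BenchLLM function.

```python
import json

# A hypothetical test definition: one input and a list of acceptable answers.
raw = """
{
  "input": "What's 1+1? Be very terse, only numeric output",
  "expected": ["2", "2.0"]
}
"""


def load_test(text: str) -> dict:
    """Parse a JSON test definition and check that the assumed fields exist."""
    data = json.loads(text)
    missing = {"input", "expected"} - data.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    if not isinstance(data["expected"], list):
        raise ValueError("'expected' must be a list of acceptable answers")
    return data


test_case = load_test(raw)
print(test_case["input"])
print(test_case["expected"])
```

Keeping each test in its own small file like this makes test suites easy to review and version alongside the code.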

Does BenchLLM offer suite organization for tests?

Yes, BenchLLM offers suite organization for tests. It allows you to organize your tests into different suites that can be easily versioned.

What automation does BenchLLM offer?

BenchLLM lets you automate evaluations in a CI/CD pipeline. This makes regular, systematic evaluation of LLMs possible, so that drops in quality are caught early.

How does BenchLLM generate evaluation reports?

BenchLLM generates evaluation reports by running the Evaluator on the predictions made by the LLM. The report provides details on the performance and accuracy of the model compared to the expected output.

How does BenchLLM's support for OpenAI, LangChain, or any other API work?

BenchLLM supports 'OpenAI', 'LangChain', or any other API 'out of the box'. This lets it work with the tools you already use in the evaluation process, giving a more comprehensive assessment of the LLM.