LLMonitor

Overview

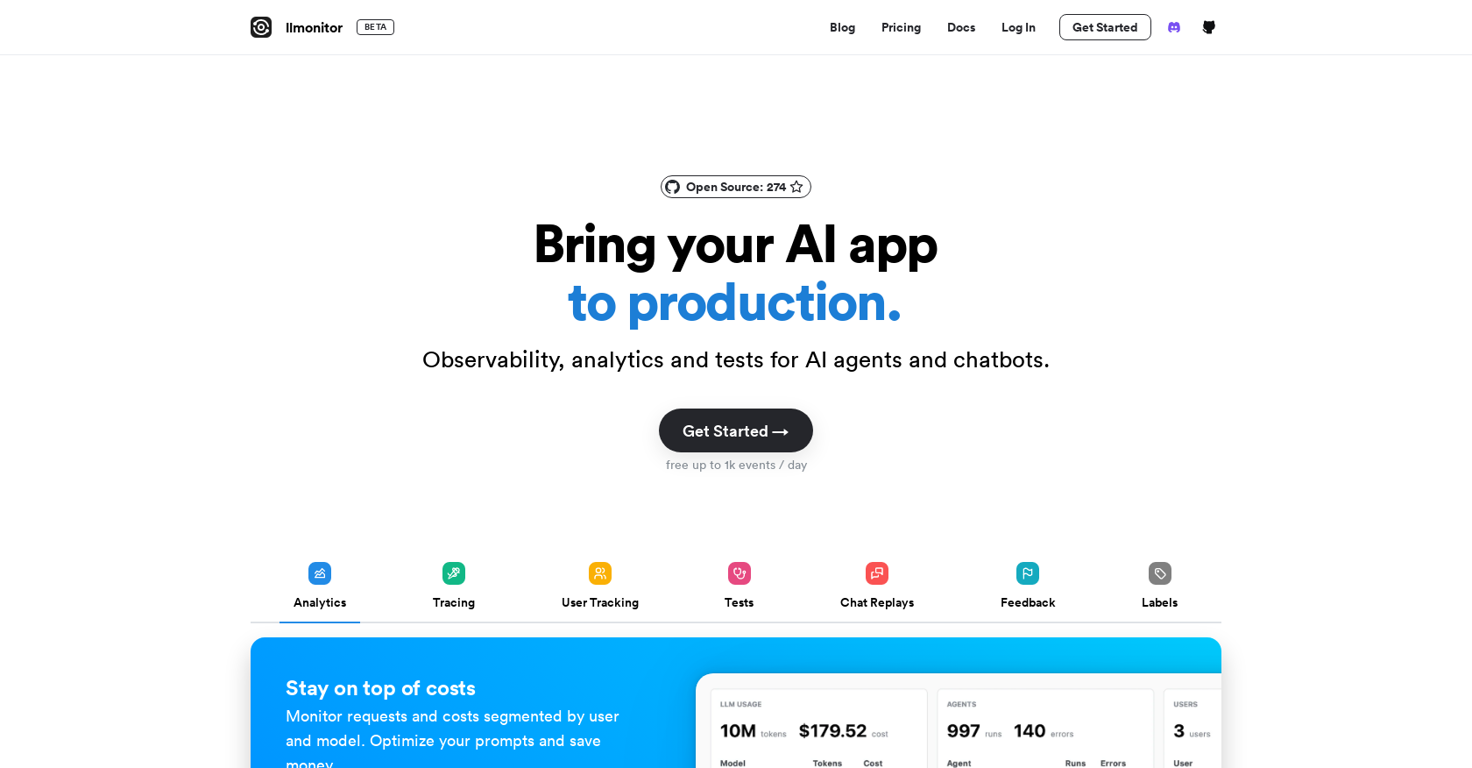

LLMonitor is a comprehensive observability and logging platform designed specifically for AI agents and chatbots built with large language models (LLMs). With LLMonitor, developers can optimize their AI applications by gaining insight into their agents' behavior, performance, and user interactions. The platform offers several key features to enhance the developer experience.

Analytics and tracing capabilities allow users to monitor requests and evaluate costs associated with different users and models, helping optimize application prompts and reduce expenses.
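
As a rough illustration of per-user, per-model cost tracking, the sketch below aggregates token-based costs from a list of logged requests. The `LLMCall` record, the model names, and the per-1,000-token prices in `PRICES` are hypothetical placeholders, not LLMonitor's data model or real provider pricing.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class LLMCall:
    """One logged model request (hypothetical record, not LLMonitor's schema)."""
    user_id: str
    model: str
    prompt_tokens: int
    completion_tokens: int


# Hypothetical USD prices per 1,000 tokens: (prompt price, completion price).
PRICES = {
    "model-a": (0.50, 1.50),
    "model-b": (0.25, 0.75),
}


def call_cost(call: LLMCall) -> float:
    """Cost of a single request under the hypothetical PRICES table."""
    prompt_price, completion_price = PRICES[call.model]
    return (call.prompt_tokens * prompt_price
            + call.completion_tokens * completion_price) / 1000


def cost_by_user_and_model(calls):
    """Sum request costs per (user_id, model) pair."""
    totals = defaultdict(float)
    for call in calls:
        totals[(call.user_id, call.model)] += call_cost(call)
    return dict(totals)


calls = [
    LLMCall("alice", "model-a", prompt_tokens=1000, completion_tokens=500),
    LLMCall("alice", "model-a", prompt_tokens=2000, completion_tokens=0),
    LLMCall("bob", "model-b", prompt_tokens=1000, completion_tokens=1000),
]
print(cost_by_user_and_model(calls))
# {('alice', 'model-a'): 2.25, ('bob', 'model-b'): 1.0}
```

Grouping by the (user, model) pair shows at a glance which users or models dominate spend, which is the signal a prompt rewrite or a switch to a cheaper model would target.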

LLMonitor also lets developers replay and debug agent executions, so they can identify issues and understand what went wrong during agent interactions. In addition, LLMonitor tracks user activity and costs and gives visibility into power users.

User tracking helps developers better understand user behavior patterns and align their strategies accordingly. The platform also supports building training datasets: developers can label outputs based on tags and user feedback and use them to improve the quality of their AI models. Furthermore, LLMonitor lets developers capture user feedback, replay user conversations, and run assertions to check that agents behave as expected.
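
A minimal sketch of turning labeled logs into a training dataset, assuming each logged run carries a set of tags and an optional feedback label. The `LoggedRun` shape, the `"thumbs_up"` feedback value, and `build_dataset` are hypothetical and only illustrate the filtering idea; they are not part of LLMonitor's SDK.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LoggedRun:
    """A logged agent input/output pair (hypothetical shape, not LLMonitor's schema)."""
    prompt: str
    output: str
    tags: set = field(default_factory=set)
    feedback: Optional[str] = None  # e.g. "thumbs_up" or "thumbs_down"


def build_dataset(runs, required_tag):
    """Keep runs that carry `required_tag` and received positive feedback."""
    return [
        {"prompt": run.prompt, "completion": run.output}
        for run in runs
        if required_tag in run.tags and run.feedback == "thumbs_up"
    ]


runs = [
    LoggedRun("Hi", "Hello! How can I help?", {"greeting"}, "thumbs_up"),
    LoggedRun("Bye", "Goodbye.", {"greeting"}, "thumbs_down"),
    LoggedRun("What is 2 + 2?", "4", {"math"}, "thumbs_up"),
]
print(build_dataset(runs, "greeting"))
# [{'prompt': 'Hi', 'completion': 'Hello! How can I help?'}]
```

The resulting prompt/completion pairs could then be exported (for example as JSON Lines) for fine-tuning or evaluation.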

The platform offers easy integration through its SDK, so developers can quickly incorporate LLMonitor into their applications. LLMonitor can be self-hosted or used as the hosted version provided by the platform.

Overall, LLMonitor provides observability and analytics capabilities tailored to AI agents and chatbots built with LLMs, enabling developers to optimize their AI applications and improve user experiences.
