Localai

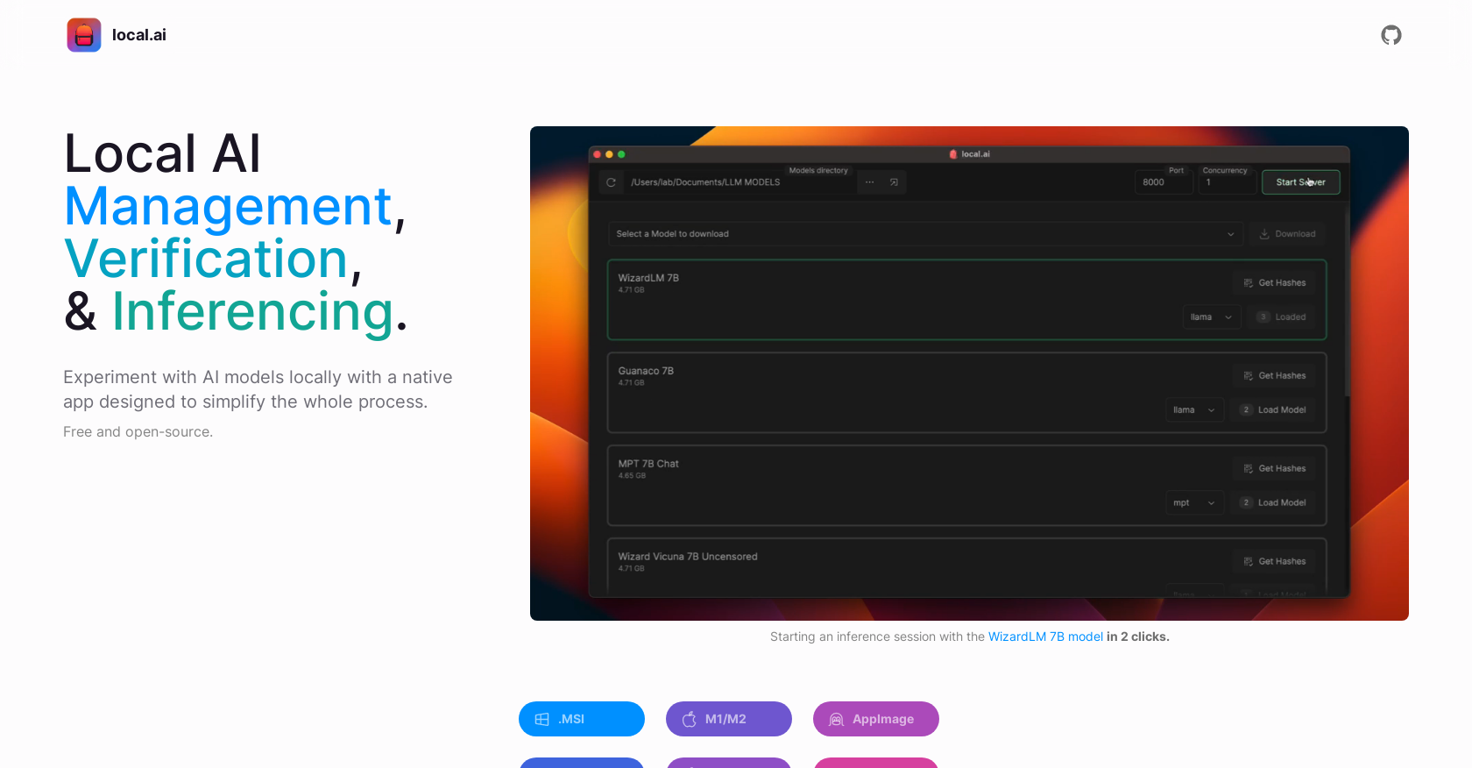

The Local AI Playground is a native app that simplifies experimenting with AI models locally. It lets users run AI experiments without any technical setup and without needing a dedicated GPU.

The tool is free and open source. Thanks to its Rust backend, the local.ai app is memory-efficient and compact, at under 10 MB on Mac M2, Windows, and Linux.

The tool offers CPU inferencing that adapts to the available threads, making it suitable for a range of computing environments. It also supports GGML quantization with q4, q5.1, q8, and f16 options. For model management, Local AI Playground lets users keep track of their AI models in one central location.
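GGML's quantized formats store weights in small blocks, each with its own scale factor. The sketch below illustrates that general idea with symmetric 4-bit block quantization in plain Python. It is a simplified illustration under stated assumptions, not GGML's actual q4 layout: the block size of 32, the scaling rule, and all function names are hypothetical, and real formats also pack two 4-bit codes into each byte.

```python
from typing import List, Tuple

BLOCK_SIZE = 32  # assumed block size; each block gets its own scale factor


def quantize_block(weights: List[float]) -> Tuple[float, List[int]]:
    """Quantize one block of floats to signed 4-bit integer codes."""
    amax = max((abs(w) for w in weights), default=0.0)
    # Choose the scale so the largest magnitude maps to 7; avoid dividing by zero.
    scale = amax / 7.0 if amax > 0.0 else 1.0
    # Clamp to the signed 4-bit range [-8, 7] as a safety net.
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return scale, codes


def dequantize_block(scale: float, codes: List[int]) -> List[float]:
    """Recover approximate floats from a block's scale and 4-bit codes."""
    return [scale * c for c in codes]


def quantize(weights: List[float]) -> List[Tuple[float, List[int]]]:
    """Split a weight vector into fixed-size blocks and quantize each one."""
    return [
        quantize_block(weights[i:i + BLOCK_SIZE])
        for i in range(0, len(weights), BLOCK_SIZE)
    ]


def dequantize(blocks: List[Tuple[float, List[int]]]) -> List[float]:
    """Concatenate the dequantized contents of every block."""
    out: List[float] = []
    for scale, codes in blocks:
        out.extend(dequantize_block(scale, codes))
    return out


if __name__ == "__main__":
    weights = [0.05 * i - 1.0 for i in range(40)]
    restored = dequantize(quantize(weights))
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print(f"max reconstruction error: {worst:.4f}")
```

Because each block has its own scale, an outlier only degrades precision within its own block. The per-element rounding error is at most half of that block's scale.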

Model management includes resumable and concurrent downloads and usage-based sorting, and it works with any directory structure. To ensure the integrity of downloaded models, the tool also offers digest verification using the BLAKE3 and SHA256 algorithms.
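The resumable downloads and digest checks described above can be sketched in Python using only the standard library. This illustrates the general techniques, an HTTP `Range` request to continue a partial file followed by an incremental SHA256 hash compared against a known-good value. It is not local.ai's own Rust implementation: the function names are hypothetical, and the sketch assumes the model host supports range requests. BLAKE3 is not in Python's standard library, so only SHA256 is shown.

```python
import hashlib
import urllib.error
import urllib.request
from pathlib import Path
from typing import Dict

CHUNK_SIZE = 1 << 20  # 1 MiB reads keep memory flat even for multi-GB model files


def resume_headers(dest: Path) -> Dict[str, str]:
    """Ask the server to continue from the end of any partial download."""
    if dest.exists() and dest.stat().st_size > 0:
        return {"Range": f"bytes={dest.stat().st_size}-"}
    return {}


def download_resumable(url: str, dest: Path) -> None:
    """Download url into dest, appending to a partial file when the server allows it."""
    request = urllib.request.Request(url, headers=resume_headers(dest))
    try:
        response = urllib.request.urlopen(request)
    except urllib.error.HTTPError as err:
        # 416 Range Not Satisfiable: assume the partial file is already complete.
        if err.code == 416:
            return
        raise
    with response:
        # 206 Partial Content means the Range was honoured; 200 means start over.
        mode = "ab" if response.status == 206 else "wb"
        with open(dest, mode) as out:
            for chunk in iter(lambda: response.read(CHUNK_SIZE), b""):
                out.write(chunk)


def sha256_file(path: Path) -> str:
    """Hash a file incrementally and return its hex SHA256 digest."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(CHUNK_SIZE), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model(path: Path, expected_sha256: str) -> bool:
    """Compare a file's digest against a known-good value (case-insensitive)."""
    return sha256_file(path) == expected_sha256.strip().lower()
```

`verify_model` returns `True` only when the file's bytes match the expected digest exactly. This sketch leaves open where the known-good value comes from. Hashing in fixed-size chunks means even very large model files are never loaded into memory all at once.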

Digest verification includes digest computation, a known-good model API, license and usage chips, and a quick check using BLAKE3. The tool also includes an inferencing server that starts a local streaming server for AI inference in just two clicks.
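A client for a local streaming server typically reads the response line by line and emits text as it arrives, rather than waiting for the whole completion. The sketch below assumes a server-sent-events style stream in which each event line looks like `data: {"text": "..."}` and the stream ends with `data: [DONE]`. The URL `http://localhost:8000/completions`, the request body, and the `text` field are hypothetical placeholders, so check what the app actually exposes before relying on them.

```python
import json
import urllib.request
from typing import Iterable, Iterator

# Hypothetical endpoint: confirm the real host, port, and path in the app.
SERVER_URL = "http://localhost:8000/completions"


def iter_sse_text(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield the assumed 'text' field from each 'data: {...}' event line."""
    for raw in lines:
        line = raw.decode("utf-8").strip()
        if not line.startswith("data:"):
            continue  # skip blank separators, comments, and other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # assumed end-of-stream marker
        event = json.loads(payload)
        yield event.get("text", "")  # hypothetical field name


def stream_completion(prompt: str) -> Iterator[str]:
    """POST a prompt and yield text fragments as the server streams them."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")  # hypothetical schema
    request = urllib.request.Request(
        SERVER_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        # HTTP responses iterate line by line, so events are parsed as they arrive.
        yield from iter_sse_text(response)
```

Keeping the line parser separate from the network call makes it easy to test against recorded output and to adapt if the real stream format differs.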

The server provides a quick inference UI, supports writing to .mdx files, and includes options for inference parameters and remote vocabulary. Overall, the Local AI Playground offers a user-friendly and efficient environment for local AI experimentation, model management, and inference.

3 alternatives to Localai for LLM testing

- The low-code platform for testing AI apps. Released 2y ago. Free + from $99/mo.
- Evaluate LLMs and generate quality reports. Released 2y ago. 100% Free.
- Build trustworthy AI: Test LLM apps for robustness and compliance. Released 1y ago. No pricing.
