LightGPT

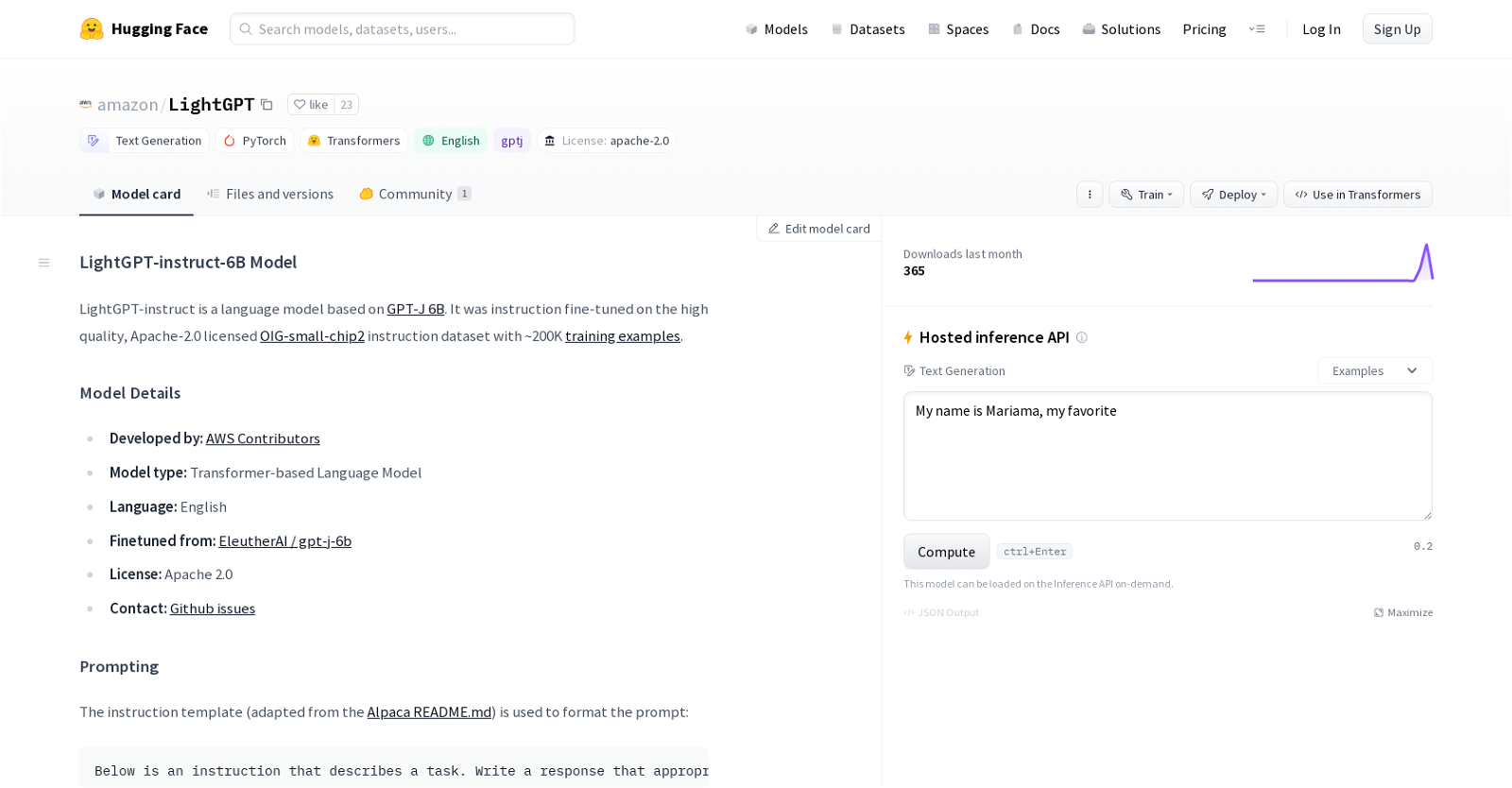

LightGPT-instruct-6B is a language model developed by AWS Contributors and based on GPT-J 6B. This Transformer-based language model has been fine-tuned on OIG-small-chip2, a high-quality, Apache-2.0-licensed instruction dataset containing around 200K training examples.

The model generates text in response to a prompt that wraps the instruction in a standard format. The prompt is expected to end with the marker ### Response:\n, after which the model writes its reply. LightGPT-instruct-6B is designed solely for English conversations and is licensed under Apache 2.0.
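As a rough illustration of the prompt format, the sketch below wraps a plain instruction in an Alpaca-style template. Only the trailing `### Response:\n` marker comes from the description above; the preamble sentence, the `### Instruction:` header, and the names `PROMPT_TEMPLATE` and `build_prompt` are assumptions made for illustration and may differ from the template the model was actually trained with.

```python
# Assumed Alpaca-style template. Only the trailing "### Response:\n"
# is stated in the description; the rest is an illustrative guess.
PROMPT_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Response:\n"
)


def build_prompt(instruction: str) -> str:
    """Wrap a plain-text instruction in the assumed template."""
    return PROMPT_TEMPLATE.format(instruction=instruction.strip())


if __name__ == "__main__":
    # The printed prompt ends with the response marker and a newline.
    print(build_prompt("How can I tell if a pomegranate is ripe?"))
```

Keeping the template in one constant means the marker at the end cannot be dropped by accident when instructions are assembled in several places.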

The model can be deployed to Amazon SageMaker, and example code is provided to demonstrate the process. The evaluation reports LAMBADA perplexity (PPL) and accuracy (ACC), WinoGrande, HellaSwag, and PIQA, with GPT-J as the comparison baseline.
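For readers unfamiliar with the two LAMBADA figures, the sketch below shows the generic definitions of perplexity and accuracy. It is not the evaluation harness used for this model, whose details are not given here; the function names and the toy inputs are hypothetical.

```python
import math
from typing import Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Perplexity: exp of the mean negative log-likelihood (natural log)."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def accuracy(predictions: Sequence[str], targets: Sequence[str]) -> float:
    """Accuracy: fraction of predictions that exactly match their target."""
    if len(predictions) != len(targets) or not targets:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(p == t for p, t in zip(predictions, targets))
    return correct / len(targets)


if __name__ == "__main__":
    # Toy values only; these are not results for LightGPT or GPT-J.
    print(perplexity([math.log(0.5)] * 4))
    print(accuracy(["door", "cat"], ["door", "dog"]))
```

Lower perplexity and higher accuracy are better, which is why the two numbers are usually read together.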

The documentation warns of the model's limitations: it may fail to follow long instructions accurately, it can give incorrect answers to math and reasoning questions, and it occasionally generates false or misleading responses.

The model generates responses based solely on the prompt it is given, with no contextual understanding beyond it. Within those bounds, LightGPT-instruct-6B is a natural language generation tool that can respond to a variety of conversational prompts, including those that carry specific instructions.

However, it is essential to be aware of its limitations while using it.
