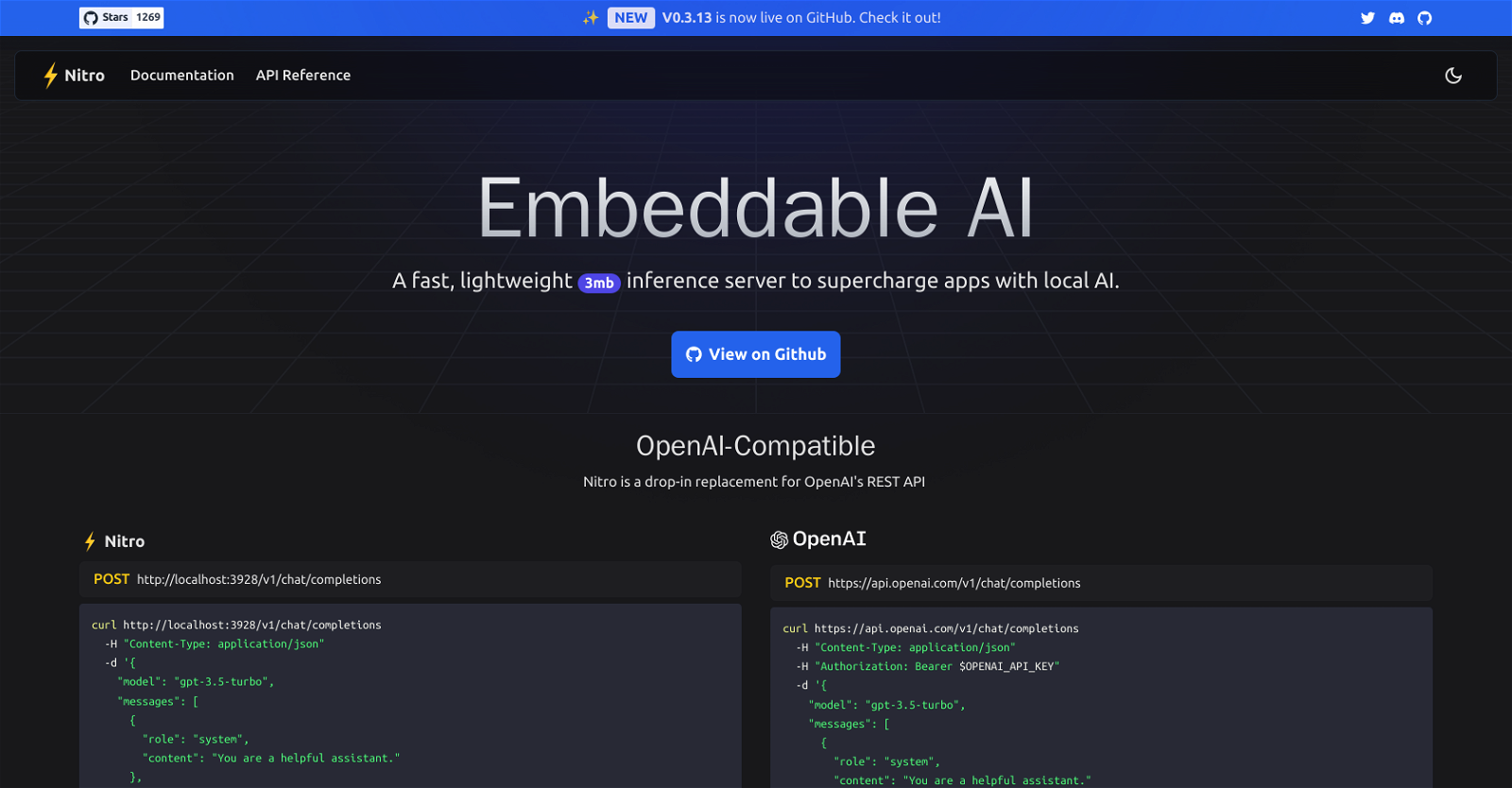

What is Nitro?

Nitro is a highly efficient C++ inference engine primarily developed for edge computing applications. It serves as a fast, lightweight server that bolsters applications with local AI capabilities. Light and embeddable, Nitro is perfect for product integration.

How does Nitro integrate with other applications?

Nitro can easily be embedded into applications to provide local AI functionality. It is designed to be compatible with OpenAI's REST API, making it a viable drop-in replacement. Nitro can also be set up quickly as an npm package, a pip package, or a binary, so it can be integrated with other applications in whichever form fits best.
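As a rough illustration of what "drop-in replacement" can mean in practice, the sketch below keeps the request body identical and changes only the base URL. The local address (`http://localhost:3928/v1`), the model name, and the helper names `chat_endpoint` and `chat_payload` are assumptions made for this example, not values confirmed by this FAQ; check your own Nitro setup for the actual address and path.

```python
import json

# Assumed values for illustration only; verify them against your deployment.
OPENAI_BASE_URL = "https://api.openai.com/v1"
LOCAL_BASE_URL = "http://localhost:3928/v1"  # hypothetical local Nitro address


def chat_endpoint(use_local: bool) -> str:
    """Return the chat completions URL for the chosen backend."""
    base = LOCAL_BASE_URL if use_local else OPENAI_BASE_URL
    return base.rstrip("/") + "/chat/completions"


def chat_payload(prompt: str, model: str) -> str:
    """Serialize an OpenAI-style chat request body (the same for both backends)."""
    return json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    })


if __name__ == "__main__":
    # The body is built once; only the target URL differs between backends.
    body = chat_payload("Hello", model="example-model")  # hypothetical model name
    for use_local in (False, True):
        print(chat_endpoint(use_local), body)
```

If the compatibility claim holds for your use case, switching an application from the hosted API to a local server would then be a configuration change rather than a code change.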

Is Nitro open-source?

Yes. Nitro is a 100% open-source project released under the AGPLv3 license.

What languages does Nitro support?

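Nitro itself is written in C++, a choice that contributes greatly to its efficiency and flexibility. Because Nitro exposes an OpenAI-compatible REST API, applications written in other languages can still use it by sending HTTP requests.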

How is Nitro compatible with OpenAI's REST API?

Nitro provides an endpoint that is compatible with OpenAI's REST API, making it a drop-in replacement. This means you can make requests to Nitro in the same way as you would to OpenAI's REST API.
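To make this concrete, here is a minimal sketch that builds an OpenAI-style chat completion request and reads the reply from an OpenAI-style response, using only Python's standard library. The base URL passed in by the caller, the `/chat/completions` path, the model name, and the function names `build_chat_request`, `extract_reply`, and `ask` are assumptions for illustration; the response shape follows OpenAI's chat completion format, which an OpenAI-compatible server is expected to mirror.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body: bytes) -> str:
    """Pull the assistant text out of an OpenAI-style chat completion response."""
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]


def ask(base_url: str, model: str, prompt: str, timeout: float = 30.0) -> str:
    """Send the request to a running server and return the reply text."""
    request = build_chat_request(base_url, model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(response.read())
```

With a server running, a call might look like `ask("http://localhost:3928/v1", "example-model", "Hello")`, where both the address and the model name are placeholders to replace with your own values.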

What CPU and GPU architectures is Nitro compatible with?

Nitro is developed to run on diverse CPU and GPU architectures, so the same engine can be used across platforms rather than being tied to one hardware vendor or operating system.

What AI libraries does Nitro integrate with?

Nitro integrates top-tier open-source AI libraries rather than reimplementing them, which lets it cover a range of AI functionality.

What AI capabilities will Nitro support in the future?

Future updates for Nitro involve the integration of AI capabilities such as think, vision, and speech. These enhancements indicate Nitro's continuous commitment to expanding its AI capabilities.

How easy is it to set up Nitro?

Nitro's setup process is extremely quick. It is designed to be user-friendly and is available as an npm package, a pip package, or a binary, giving developers several installation options.

How can I get Nitro as an npm or pip package?

Nitro can be installed as an npm package with the npm install command or as a pip package with the pip install command. Given its accessibility and quick setup time, Nitro is a convenient option for developers who want to add local AI functionality.

What license is Nitro under?

Nitro is licensed under AGPLv3. The choice of this copyleft license suggests a commitment to community-driven AI development.

What is the architectural structure of Nitro?

Nitro's architectural structure is primarily designed for efficiency and versatility. The architecture allows Nitro to run on multiple CPU and GPU architectures, enabling cross-platform compatibility. This operational and architectural flexibility is part of what makes Nitro an effective tool for AI integration.

What kind of applications can benefit from Nitro?

Applications that require efficient AI functionality, particularly edge computing applications, can benefit from Nitro. Thanks to its lightweight nature, speed, and embeddability, Nitro is ideal for supercharging apps with local AI capabilities.

How lightweight is Nitro compared to similar tools?

Nitro is presented as exceptionally lightweight compared to similar tools. At just 3 MB, it is a compact option for app developers who want to run local AI without significantly increasing their application's size.

What functions does Nitro provide for local AI implementation?

Nitro provides a lightweight inference server that supercharges applications with local AI capabilities. This allows developers to integrate AI functionalities efficiently into their applications.

What type of CPU and GPU architectures is Nitro adaptable to?

Nitro is adaptable to a wide range of CPU and GPU architectures. It's designed to ensure cross-platform compatibility, making it a flexible and versatile tool for different hardware setups.

What are the future updates planned for Nitro?

Future updates planned for Nitro include the integration of additional AI capabilities such as think, vision, and speech. These updates show Nitro's ongoing commitment to broadening and improving its AI capabilities.

How can Nitro supercharge apps with local AI?

Nitro supercharges apps with local AI by serving as a fast, lightweight inference server. It enables efficient integration of AI functionalities at a local level, leading to improved performance and capability of the applications.

What are the system requirements for running Nitro?

While exact system requirements are not specified, Nitro is designed to operate on diverse CPU and GPU architectures, which indicates broad compatibility with different system configurations.

What is edge computing in the context of Nitro?

In the context of Nitro, edge computing refers to the deployment and execution of AI functionalities locally on devices (the 'edge' of the network) instead of relying on a central server or cloud resources. Nitro is designed primarily for these edge computing applications, providing a lightweight and efficient tool to integrate local AI within applications.