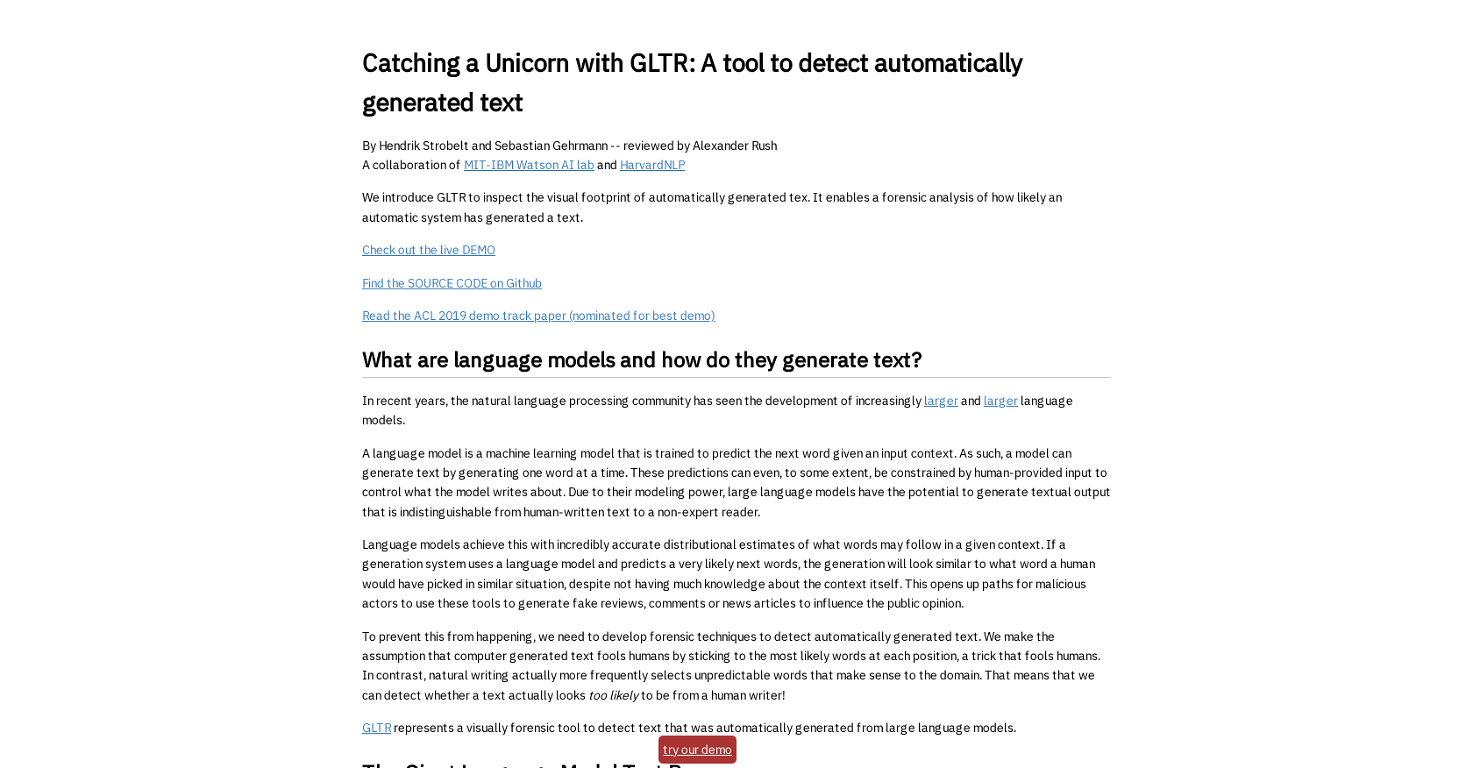

What is GLTR?

GLTR, or Giant Language model Test Room, is an analytical tool developed for detecting automatically generated text. It primarily operates by examining the 'visual footprint' of the text and assists in ascertaining whether an automatic system has generated the content.

Who developed GLTR?

GLTR was developed by a joint venture between the MIT-IBM Watson AI lab and HarvardNLP.

How does GLTR detect automatically generated text?

GLTR detects automatically generated text by analyzing how likely it is a language model has produced the text. It uses language models like GPT-2 117M language model from OpenAI to analyze textual input and predict what GPT-2 might have generated at each position. It also presents a colored mask overlay to represent the probablility of each word being used based on the model.

What is the role of the GPT-2 117M language model in GLTR?

The GPT-2 117M language model plays a key role in GLTR's operations. GLTR analyzes textual input and evaluates what GPT-2 might have predicted at each position, which helps in determining whether a text has been artificially generated.

How does GLTR visually analyze text output?

GLTR visually examines the output via colored word overlays and histograms. Each word is ranked according to the likelihood of its production by the GPT-2 language model, with different colors representing varying degrees of likelihood. The histograms aggregate information regarding word likeliness, prediction ratio between top predicted word and next word, and prediction entropy distribution across the analyzed text.

What do the different color highlights in GLTR represent?

The different color highlights represent the varying degrees of likelihood of words being produced by the language model. Words within the top 10 most likely words are highlighted in green, those within the top 100 are in yellow, and those within the top 1,000 are in red. All other words are in purple.

What is the significance of the histograms in GLTR?

The histograms in GLTR amplify the detection process by aggregating entire text information. The first histogram shows the count of each category of words in the text. The second illustrates the ratio between the probabilities of the top predicted word and subsequent word. The third displays the distribution across the probability entropies of the predictions. This combined insight supports the evidence of whether a text has been machine-generated.

Can GLTR be used to detect fake reviews and news articles?

Yes, GLTR can be used to detect fake reviews, comments, and news articles that have been artificially generated by substantial language models.

How can I access GLTR?

GLTR is accessible to users through a live demo.

Is the source code for GLTR available?

Yes, the source code for GLTR is open-source and accessible on Github.

What is the 'visual footprint' that GLTR uses for detecting generated text?

The 'visual footprint' that GLTR uses for detecting generated text comprises a colored overlay mask that indicates the probability of each word given its position in the text, suggests how likely each word was predicted by the language model.

What does the colored overlay mask in GLTR indicate?

The colored overlay mask in GLTR provides a direct visual indication of how likely a word was predicted under the model. Words ranked within the top 10, 100, and 1,000 most likely words are highlighted in green, yellow, and red, respectively. The remaining words are highlighted in purple.

How does GLTR provide additional evidence of artificially generated text?

GLTR provides additional evidence of artificially generated text by showcasing three histograms related to the whole text. These graphs denote how many words of each category appear in the text, the ratio between the probabilities of the top predicted word and the next word, and the distribution over the prediction entropies. These insights collectively provide a stronger, more conclusive signal of synthetic text.

Are there limitations to the effectiveness of GLTR?

While GLTR offers advanced forensic text analysis capabilities, there are limitations to its effectiveness. It works best on an individual text basis, and might struggle to automatically detect large-scale language model hobbyism. Furthermore, its performance largely depends on the user's comprehensive understanding of the language in question to evaluate whether an unusual word makes sense in a given context.

How does GLTR use large language models to analyze textual input?

GLTR uses large language models, such as the GPT-2 117M from OpenAI, to examine textual input and gauge what the language model might have predicted at each position. Its methodology involves using the same language models that are used to generate fake text to also detect it. This way, the tool can sort the words according to their likelihood of being produced by the model, providing crucial insights into whether a text was artificially generated.

How can GLTR help in cyber security and AI ethics?

GLTR contributes to cyber security and AI ethics by providing a way to detect automatically generated text, which can be used maliciously to generate fake reviews, comments, or news articles. By identifying whether a text has been artificially generated, it becomes easier to uncover potential misinformation or manipulation attempts, thereby promoting transparency and ethical use of AI in textual data applications.

How does GLTR rank words according to their likelihood of being produced by a language model?

GLTR ranks words based on their likelihood of being generated by a language model. This is achieved by comparing textual input with predictions from the GPT-2. Words that are most likely to be generated by the model are ranked higher and highlighted in various colors depending upon their ranking - green for the most likely (top 10), followed by yellow and red, while the rest are highlighted in purple.

What happens when you hover over a word in the GLTR display?

When you hover over a word in the GLTR display, a small box presents the top 5 predicted words, their associated probabilities, as well as the rank of the succeeding word. This exercise gives further insights into what the model might have predicted.

What does GLTR mean by 'too likely' to be from a human writer?

'Too likely' to be from a human writer, as per GLTR, refers to the hypothesis that computer generated text often adheres to highly probable words at each position, which makes the text appear convincingly human authored. Conversely, natural human writing exhibits a higher frequency of unpredictable yet contextually appropriate words, that make the content less likely to be computer generated.

How does GLTR use the uncertainties of predictions in its analysis?

GLTR employs prediction uncertainties in its analysis to understand the model's confidence in each prediction. Uncertainties are obtainable from the language model's entropy, which is then used to construct one of GLTR's histograms. Lower uncertainty signifies the model had strong confidence in a particular prediction, whereas higher uncertainty suggests a lack of confidence. Observing this can offer further insights to distinguish human-written text from machine-generated ones.

211

211 214

214 1

1